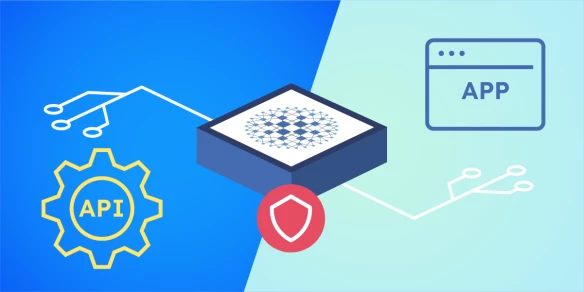

HAProxy Fusion: New External Load Balancing & Multi-Cluster Routing Features

HAProxy Fusion Control Plane enables service discovery in any Kubernetes or Consul environment without complex workarounds. Harness powerful external load balancing, multi-cluster routing, and more.